Memory hierarchy

The instructions and data that the CPU processes are taken from memory. Memory comes in several layers.

Registers - the top layer: high-speed storage cells inside the CPU (each can hold 32 or 64 bits of data)

Caches - if the data cannot be found in the registers, it is looked up in the next level, which is the cache

L1 cache - the fastest and smallest (usually on the CPU chip), 32-256 KB

L2 cache - if the needed data is not in L1, the CPU tries to find it in L2, which can be megabytes in size (4 MB)

L3 cache - a bit farther away, around 32 MB

RAM - if the needed data is not in the hardware caches, the TLB (Translation Lookaside Buffer) is checked first, then RAM

TLB - a cache of recently used virtual-to-real address translations

Disk - if the address is not in RAM, a page fault occurs and the data is retrieved from the hard disk.

A page fault is a request to load a 4KB data page from disk.

The way demand paging works is that the kernel loads only a few pages at a time into real memory. When the CPU is ready for another page, it looks in RAM. If the page is not there, a page fault occurs, which signals the kernel to bring more pages into RAM from disk.

If the CPU is waiting for data from real memory, it is still considered to be in the busy state. If the data is needed from disk, the CPU is in the I/O wait state.

-------------------------------------

L2 Cache and performance:

L2 cache is a fast memory which stores copies of the data from the most frequently used main memory locations.

First picture shows a Power5 system, second picture Power6 (or Power7) system.

In Power5 systems, there is a single L2 cache on a processor chip, shared by both cores on the chip. Later servers (Power6 and Power7) have a separate L2 cache for each core on a chip. A partition's performance depends on how efficiently it uses the processor cache.

If L2 cache interference from other partitions is minimal, the partition performs much better. Sharing the L2 cache with other partitions means that a processor's most frequently accessed data may be flushed out of the cache, and fetching it from the L3 cache or from main memory takes more time.

-------------------------------------

Paging Space (also called Swap space)

RAM and the paging space are divided into 4 KB sections called page frames. (A page is a unit of virtual memory that holds 4 KB of data.) When the system needs more RAM, page frames of information are moved out of RAM and onto the hard disk. This is called paging out. When those page frames are needed again, they are read from the hard disk and moved back into RAM. This is called paging in.

When the system spends more time shuffling page frames in and out of RAM than doing useful work, the system is thrashing.

When the amount of available paging space falls below a threshold, called the paging space warning level, the system sends all processes (except the kernel processes) a SIGDANGER signal. This signal tells the processes to terminate gracefully. When the amount of free paging space falls further, below a second threshold called the paging space kill level, the system sends a SIGKILL signal to the processes that are using the most paging space (they are terminated non-gracefully).

When AIX is installed, it automatically creates a paging space on the installation disk, which is usually the hard disk hdisk0. The name of this paging space is always hd6. The file /etc/swapspaces contains a list of the paging space areas that will be activated at system startup.

swapon is a term from the days before page frames were used. At that time, around 1982, UNIX systems swapped entire programs out of RAM and onto the hard disk. Today, a portion of the program is left in RAM, and the rest is paged out onto the hard disk. The term swapon stuck, so today we sometimes refer to paging out and paging in as swapping.

Once a computational page has been paged out, it continues to take up space in the paging space as long as the process exists, even if the page is subsequently paged back in. In general, you should avoid paging altogether.

How much paging space do you need on your system? What is the rule of thumb?

Database administrators usually like to request the highest number of everything and might instruct you to make the paging space twice the size of your RAM (the old rule of thumb). Generally speaking, if my system has more than 4 GB of RAM, I usually like to create a one-to-one ratio of paging space to RAM. Monitor your system frequently after going live. If you see that you are never really approaching 50 percent paging space utilization, don't add more space.

The number and types of applications dictate the amount of paging space needed. Many sizing "rules of thumb" have been published, but the only way to size your machine's paging space correctly is to monitor the amount of paging activity.
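
The two rules of thumb above can be written down as a small helper. This is only a sketch: the function name suggest_paging_gb is made up for illustration, and applying the old "twice the RAM" rule to systems with 4 GB or less is an assumption, since the text only states the one-to-one ratio for systems above 4 GB. Treat the result as a starting point and keep monitoring real usage.

```shell
# Suggest a starting paging space size (in GB) for a given RAM size (in GB).
# Assumption: at or below 4 GB the old "2 x RAM" rule is used;
# above 4 GB the one-to-one ratio from the text is used.
suggest_paging_gb() {
    ram_gb=$1
    if [ "$ram_gb" -gt 4 ]; then
        echo "$ram_gb"
    else
        echo $((ram_gb * 2))
    fi
}

suggest_paging_gb 2     # prints 4
suggest_paging_gb 16    # prints 16
```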

Tips for paging space:

- Only 1 paging space per disk

- Use disks with the least activity

- Paging spaces should be the same size

- Do not extend a paging space across multiple PVs

Ideally, there should be several paging spaces of equal size, each on a different physical volume. Paging space is allocated in a round-robin manner, which uses all paging areas equally. If you have two paging areas on one disk, you are no longer spreading the activity across several disks. Because of the round-robin technique, paging spaces that are not the same size will not be used in a balanced way.

bootinfo -r                       displays the real memory in kilobytes (this also works: lsattr -El sys0 -a realmem)
lscfg -vp | grep -p DIMM          displays the memory DIMMs
lsattr -El sys0 -a realmem        (list attributes) shows how much real memory you have
ps aux | sort +4 -n               lists how much memory is used by the processes
svmon -P | grep -p <pid>          shows how much paging space a process is using
svmon -P -O sortseg=pgsp          shows paging space usage of processes

mkps -s 4 -n -a rootvg hdisk0     creates a paging space (the name is assigned automatically: paging00)
                                  -n activates it immediately
                                  -a activates it at the next restart as well (adds it to /etc/swapspaces)
                                  -s size: 4 LPs
lsps -a                           lists all paging spaces and the usage of each paging space
lsps -s                           summary of all paging spaces combined (all the paging spaces are added together)
chps -s 3 hd6                     dynamically increases the size of a paging space by 3 LPs
chps -d 1 paging00                dynamically decreases the size of a paging space by 1 LP (it will create a temporary paging space)
/etc/swapspaces                   contains a list of the paging space areas
vmstat -s
smitty mkps                       adding paging space
smitty chps                       changing paging space
swapon /dev/paging02              dynamically activates, or brings online, a paging space (or smitty pgsp)
swapoff /dev/paging03             deactivates a paging space
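
The 50 percent guideline mentioned earlier can be checked from the output of lsps -s. The sketch below assumes the usual two-line layout of that output (a header line, then the total size and the percentage); the function names paging_pct_used and paging_check are hypothetical, and a captured sample stands in for the live command so the example runs anywhere.

```shell
# Read `lsps -s` output on stdin and print the "Percent Used" value
# (second field of the data line, with the trailing % removed).
paging_pct_used() {
    awk 'NR == 2 { sub(/%/, "", $2); print $2 }'
}

# Hypothetical helper: report whether usage is at or above a limit (default 50).
paging_check() {
    limit=${1:-50}
    pct=$(paging_pct_used)
    [ -n "$pct" ] || { echo "no lsps data on stdin" >&2; return 1; }
    if [ "$pct" -ge "$limit" ]; then
        echo "WARN paging space ${pct}% used"
    else
        echo "OK paging space ${pct}% used"
    fi
}

# On AIX this would be: lsps -s | paging_check
paging_check <<'EOF'
Total Paging Space   Percent Used
      512MB               1%
EOF
# prints: OK paging space 1% used
```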

------------------------------

removing a paging space:

swapoff /dev/paging03 deactivate a paging space (the /dev is needed)

rmps paging03 removes a paging space (the /dev is not needed)

------------------------------

For flushing the paging space:

(the paging space shows a high usage percentage, but actually nothing is using it)

1. chps -d 1 hd6     decreases the size of the paging space by 1 LP (it creates a temporary paging space, copies the content...)

(if there is not enough space in the VG, it will not do that)

2. chps -s 1 hd6     increases the paging space back to its original size

------------------------------

Fork:

A message such as cannot fork... is probably caused by low paging space.

When a process calls fork(), the operating system creates a child process of the calling process. The child process created by fork() is a replica of the calling process. Some server processes, or daemons, call fork() several times to create more than one instance of themselves. An example is a web server that pre-forks so that it can handle a certain number of incoming connections without having to fork() the moment they arrive.

------------------------------

Virtual Memory Management:

(this section also applies to ioo, no and nfso)

vmo -a|egrep "minperm%|maxperm%|maxclient%|lru_file_repage|strict_maxclient|strict_maxperm|minfree|maxfree"

root@aix04: / # vmo -a |grep maxclient

maxclient% = 8

strict_maxclient = 1

root@aix1: /root # vmo -L
NAME                      CUR    DEF    BOOT   MIN    MAX    UNIT           TYPE
     DEPENDENCIES
--------------------------------------------------------------------------------
cpu_scale_memp            8      8      8      1      64                    B
--------------------------------------------------------------------------------
data_stagger_interval     n/a    161    161    0      4K-1   4KB pages      D
     lgpg_regions
--------------------------------------------------------------------------------

D = Dynamic: can be freely changed

B = Bosboot: can only be changed using bosboot and reboot

S = Static: the parameter can never be changed

R = Reboot: the parameter can only be changed during boot

/etc/tunables/nextboot         <--values to be applied at the next reboot. This file is automatically applied at boot time.

/etc/tunables/lastboot         <--automatically generated at boot time. It contains the parameters with their values after the last boot.

/etc/tunables/lastboot.log     <--contains the logging of the creation of the lastboot file, that is, any parameter change made is logged

vmo -o maxperm%=80                          <--sets maxperm% to 80

vmo -p -o maxperm%=80 -o maxclient%=80      <--sets maxperm% and maxclient% to 80

(-p: sets both the current and the reboot values: it updates the current value and /etc/tunables/nextboot)

vmo -r -o lgpg_size=0 -o lgpg_regions=0     <--sets the values only in the nextboot file, so they become active after a reboot

------------------------------

SAP Note 973227 recommendations:

minperm% = 3

maxperm% = 90

maxclient% = 90

lru_file_repage = 0

strict_maxclient = 1

strict_maxperm = 0

minfree = 960

maxfree = 1088
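
To see which tunables differ from this list, the "name = value" lines printed by vmo -a can be compared against it. The following is a minimal sketch: the function name vmo_diff is hypothetical, the recommended values are copied from the list above, and the input is assumed to have the "name = value" shape shown in the vmo -a example earlier in this section.

```shell
# Read "name = value" lines on stdin and print one line for every tunable
# whose value differs from the recommended list. Prints nothing if all match.
vmo_diff() {
    awk '
    BEGIN {
        want["minperm%"] = 3;          want["maxperm%"] = 90
        want["maxclient%"] = 90;       want["lru_file_repage"] = 0
        want["strict_maxclient"] = 1;  want["strict_maxperm"] = 0
        want["minfree"] = 960;         want["maxfree"] = 1088
    }
    $2 == "=" && ($1 in want) && $3 != want[$1] {
        printf "%s: current=%s recommended=%s\n", $1, $3, want[$1]
    }'
}

# On AIX this would be: vmo -a | vmo_diff
vmo_diff <<'EOF'
maxclient% = 8
strict_maxclient = 1
EOF
# prints: maxclient%: current=8 recommended=90
```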

--------------------------------------

AiX for System Administrators

Article Practical Guide to AIX System for System Administrators

Friday, September 20, 2013

File System iO AiX Has Special Features

I/O - AIO, DIO, CIO, RAW

Filesystem I/O

AIX has special features to enhance the performance of filesystem I/O for general-purpose file access. These features include read ahead, write behind and I/O buffering. Oracle employs its own I/O optimization and buffering, which in most cases are redundant to those provided by AIX file systems. Oracle uses buffer cache management (data blocks buffered in shared memory), and AIX uses virtual memory management (data buffered in virtual memory). If both try to manage data caching, the result is wasted memory and CPU, and suboptimal performance.

It is generally better to allow Oracle to manage I/O buffering, because it has information about the context and so can better optimize memory usage.

Asynchronous I/O

A read can be considered synchronous if a disk operation is required to read the data into memory. In this case, application processing cannot continue until the I/O operation is complete. Asynchronous I/O allows applications to initiate read or write operations without being blocked, since all I/O operations are done in the background. This can improve performance, because I/O operations and application processing can run simultaneously.

Asynchronous I/O on filesystems is handled through a kernel process called aioserver (in this case each I/O is handled by a single kproc).

The minimum number of servers (aioserver) configured when asynchronous I/O is enabled is 1 (minservers). Additional aioservers are started when more asynchronous I/O is requested. The maximum number of servers is controlled by maxservers. aioserver kernel threads do not go away once started, until the system reboots (so with "ps -k" we can see the maximum number of aio servers that were needed concurrently at some time in the past).

How many should you configure?

The rule of thumb is to set the maximum number of servers (maxservers) to ten times the number of disks or ten times the number of processors. minservers would be set to half of this amount. Other than having some more kernel processes hanging around that don't really get used (using a small amount of kernel memory), there is little risk in oversizing maxservers, so don't be afraid to bump it up.
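
The arithmetic of this rule of thumb is simple enough to sketch. The function name aio_servers is made up for this example, and whether the count passed in is the number of disks or the number of processors is left to the caller, as in the text.

```shell
# Rule of thumb from the text: maxservers = 10 x (disks or processors),
# minservers = half of maxservers.
aio_servers() {
    count=$1
    max=$((count * 10))
    min=$((max / 2))
    echo "maxservers=$max minservers=$min"
}

aio_servers 4    # prints maxservers=40 minservers=20
```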

root@aix31: / # lsattr -El aio0
autoconfig defined STATE to be configured at system restart True
fastpath   enable  State of fast path                       True
kprocprio  39      Server PRIORITY                          True
maxreqs    4096    Maximum number of REQUESTS               True
maxservers 10      MAXIMUM number of servers per cpu        True
minservers 1       MINIMUM number of servers                True

maxreqs    <-max number of aio requests that can be outstanding at one time
maxservers <-if you have 4 CPUs, then the max count of aio kernel threads would be 40
minservers <-this many will be started at boot (this is not per CPU)

Oracle takes full advantage of Asynchronous I/O provided by AIX, resulting in faster database access.

on AIX 6.1: ioo is handling aio (ioo -a)

mkdev -l aio0                        enables the AIO device driver (smitty aio)
aioo -a                              shows the value of minservers, maxservers... (or lsattr -El aio0)
chdev -l aio0 -a maxservers='30'     changes the maxservers value to 30 (it will show the new value, but it will be active only after reboot)
ps -k | grep aio | wc -l             shows how many aio servers are running
                                     (these are not necessarily in use, many of them may just be idle)
pstat -a                             shows the asynchronous I/O servers by name

iostat -AQ 2 2                       shows if any aio is in use by filesystems
iostat -AQ 1 | grep -v " 0 "         omits the empty lines
                                     it shows which filesystems are active with regard to aio
                                     the count column shows how much aio each filesystem requested
                                     (it is good for seeing which fs is aio intensive)

root@aix10: /root # ps -kf | grep aio <--it will show the accumulated CPU time of each aio process

root 127176 1 0 Mar 24 - 15:07 aioserver

root 131156 1 0 Mar 24 - 14:40 aioserver

root 139366 1 0 Mar 24 - 14:51 aioserver

root 151650 1 0 Mar 24 - 14:02 aioserver

It is good to compare the times of the processes to see whether more aioservers are needed. If the times are nearly identical (only a few minutes' difference), all of them are being used to the maximum, so more processes are needed.
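
This comparison can be scripted. The sketch below reads lines in the shape of the ps -kf output shown above and reports the smallest and largest accumulated CPU time; the function name aio_time_spread is hypothetical, and it assumes that TIME is the next-to-last field in mm:ss (or hh:mm:ss) form. How small a spread counts as "identical" is left to the reader.

```shell
# Read `ps -kf | grep aio` style lines on stdin and print the number of
# aioserver kprocs plus their minimum, maximum and spread of CPU time.
# Assumption: TIME is the next-to-last field, formatted mm:ss or hh:mm:ss.
aio_time_spread() {
    awk '
    $NF == "aioserver" {
        n = split($(NF - 1), t, ":")
        secs = 0
        for (i = 1; i <= n; i++) secs = secs * 60 + t[i]
        if (count == 0 || secs < min) min = secs
        if (count == 0 || secs > max) max = secs
        count++
    }
    END {
        if (count > 0)
            printf "servers=%d min=%ds max=%ds spread=%ds\n", count, min, max, max - min
    }'
}

# On AIX this would be: ps -kf | grep aio | aio_time_spread
aio_time_spread <<'EOF'
root 127176 1 0 Mar 24 - 15:07 aioserver
root 131156 1 0 Mar 24 - 14:40 aioserver
root 139366 1 0 Mar 24 - 14:51 aioserver
root 151650 1 0 Mar 24 - 14:02 aioserver
EOF
# prints: servers=4 min=842s max=907s spread=65s
```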

------------------------------------

iostat -A     reports asynchronous I/O statistics

aio: avgc avfc maxgc maxfc maxreqs avg-cpu: % user % sys % idle % iowait
     10.2  0.0     5     0    4096            20.6   4.5   64.7     10.3

avgc: the average global asynchronous I/O request count per second for the interval you specified.

avfc: the average fastpath request count per second for your interval.

------------------------------------

Changing aio parameters:

You can set the values online, with no interruption of service, BUT they will not take effect until the next time the kernel is booted.

1. lsattr -El aio0 <-- check the current settings of the aio0 device

2. chdev -l aio0 -a maxreqs=<value> -P <-- set the value of maxreqs permanently for the next reboot

3. restart the server

------------------------------------

0509-036 Cannot load program aioo because...

if you receive this:

root@aix30: / # aioo -a

exec(): 0509-036 Cannot load program aioo because of the following errors:

0509-130 Symbol resolution failed for aioo because:

....

the aio0 device is probably in Defined state:

root@aix30: / # lsattr -El aio0

autoconfig defined STATE to be configured at system restart True

You should make it available with: mkdev -l aio0

(and also change it for future restarts: chdev -l aio0 -a autoconfig=available, or with 'smitty aio')

------------------------------------

DIRECT I/O (DIO)

Direct I/O is an alternative non-caching policy which causes file data to be transferred directly to the disk from the application or directly from the disk to the application without going through the VMM file cache.

Direct I/O reads cause synchronous reads from the disk, whereas with the normal cached policy the reads may be satisfied from the cache. This can result in poor performance if the data was likely to be in memory under the normal caching policy.

Direct I/O can be enabled: mount -o dio

If JFS2 DIO or CIO options are active, no filesystem cache is used for Oracle .dbf and/or online redo log files.

Databases normally manage data caching at the application level, so they do not need the filesystem to implement this service for them. The use of the file buffer cache results in undesirable overhead, since data is first moved from the disk to the file buffer cache and from there to the application buffer. This "double-copying" of data results in additional CPU and memory consumption.

JFS2 supports DIO as well as CIO. The CIO model is built on top of DIO. For JFS2 based environments, CIO should always be used (instead of DIO) in those situations where bypassing the filesystem cache is appropriate.

JFS DIO should only be used:

On Oracle data (.dbf) files, where DB_BLOCK_SIZE is 4k or greater. (Use of JFS DIO on any other files (e.g. redo logs, control files) is likely to result in a severe performance penalty.)

------------------------------

CONCURRENT I/O (CIO)

The inode lock imposes write serialization at the file level. JFS2 (by default) employs serialization mechanisms to ensure the integrity of data being updated. An inode lock ensures that there is at most one outstanding write I/O to a file at any point in time; while a write is in progress, reads are not allowed because they may return stale data.

Oracle implements its own I/O serialization mechanisms to ensure data integrity, so JFS2 offers the Concurrent I/O option. Under CIO, multiple threads may simultaneously perform reads and writes on a shared file. Applications that do not enforce serialization should not use CIO (data corruption or performance issues can occur).

CIO invokes direct I/O, so it has all the other performance considerations associated with direct I/O. With standard direct I/O, inodes are locked to prevent a condition where multiple threads might try to change the contents of a file simultaneously. Concurrent I/O bypasses the inode lock, which allows multiple threads to read and write data concurrently to the same file.

CIO includes the performance benefits previously available with DIO, plus the elimination of the contention on the inode lock.

Concurrent I/O should only be used for:

Oracle .dbf files, online redo logs and/or control files.

When used for online redo logs or control files, these files should be isolated in their own JFS2 filesystems, with agblksize=512.

Filesystems containing .dbf files, should be created with:

-agblksize=2048 if DB_BLOCK_SIZE=2k

-agblksize=4096 if DB_BLOCK_SIZE>=4k

(Failure to implement these agblksize values is likely to result in a severe performance penalty.)
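
The agblksize values listed above can be captured in a small lookup. This is a sketch only: the function name cio_agblksize and the "redo"/"dbf" keywords are made up for illustration, and block sizes other than 2k or 4k and above are rejected because the text gives no value for them.

```shell
# Map a file type and Oracle DB_BLOCK_SIZE (in bytes) to the agblksize
# values listed above. "redo" covers online redo logs and control files.
cio_agblksize() {
    kind=$1
    db_block_size=${2:-0}
    case $kind in
        redo)
            echo 512 ;;
        dbf)
            if [ "$db_block_size" -ge 4096 ]; then
                echo 4096
            elif [ "$db_block_size" -eq 2048 ]; then
                echo 2048
            else
                echo "unsupported DB_BLOCK_SIZE: $db_block_size" >&2
                return 1
            fi ;;
        *)
            echo "unknown file type: $kind" >&2
            return 1 ;;
    esac
}

cio_agblksize redo        # prints 512
cio_agblksize dbf 8192    # prints 4096
```

The value could then be passed to the filesystem creation command, for example as -a agblksize=$(cio_agblksize dbf 8192) on crfs; the volume group and mount point would be site specific.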

Do not, under any circumstances, use the CIO mount option for the filesystem containing the Oracle binaries (!!!).

Additionally, do not use DIO/CIO options for filesystems containing archive logs or any other files not discussed here.

Applications that use raw logical volumes for data storage don't encounter inode lock contention, since they don't access files.

fsfastpath should be enabled to initiate aio requests directly to LVM or disk, for maximum performance (aioo -a)

------------------------------

RAW I/O

When using raw devices with Oracle, the devices are either raw logical volumes or raw disks. When using raw disks, the LVM layer is bypassed. The use of raw lv's is recommended for Oracle data files, unless ASM is used. ASM has the capability to create data files, which do not need to be mapped directly to disks. With ASM, using raw disks is preferred.

Firmware Adapter System and Firmware lsmcode

FIRMWARE:

ADAPTER/SYSTEM FIRMWARE:

lsmcode -A     displays microcode (same as firmware) level information for all supported devices

(Firmware: software that has been written onto read-only memory (ROM))

lsmcode -c     shows the firmware level for the system and processor

invscout:

it helps to show which firmware (microcode) should be updated:

1. download: http://public.dhe.ibm.com/software/server/firmware/catalog.mic

2. copy it on the server to: /var/adm/invscout/microcode

3. run: invscout (it collects data and creates: /var/adm/invscout/<hostname>.mup)

4. upload <hostname>.mup to: http://www14.software.ibm.com/webapp/set2/mds/fetch?page=mdsUpload.html

-----------------------------------------------------

SYSTEM FIRMWARE UPDATE:

(update is concurrent, upgrade is disruptive)

1. download the files from FLRT

2. copy the files to a NIM server (NFS export) or put them onto an FTP server (for me the package contained xml and rpm files)

3. make sure there are no deconfigured CPUs/RAM or broken hardware devices in the server

4. On the HMC -> LIC (Licensed Internal Code) Maintenance -> LIC Updates -> Change LIC for the current release

(if you want to do an upgrade (not update) choose: Upgrade Licensed Internal Code)

5. Choose the machine -> Change LIC (Licensed Internal Code) wizard

6. FTP site:

FTP site: 10.20.10.10

User ID: root

Passw: <root pw>

Change Directory: set to the uploaded dir

7. follow the wizard (next, next..); it will be done after about 20-30 minutes

"The repository does not contain any applicable upgrade updates HSCF0050" or ..."The selected repository does not contain any new updates."

This can happen if the ftp user was not created by the official AIX script: /usr/samples/tcpip/anon.ftp

Create the ftp user with this script and try again.

-----------------------------------------------------

ADAPTER FIRMWARE LEVEL:

For FC adapter:

root@aix1: /root # lscfg -vpl fcs0

fcs0 P2-I2 FC Adapter

Part Number.................09P5079

EC Level....................A

Serial Number...............1C2120A894

Manufacturer................001C

Customer Card ID Number.....2765 <--it shows the feature code (could be like this: Feature Code/Marketing ID...5704)

FRU Number..................09P5080 <--identifies the adapter

Network Address.............10000000C92BC1EF

ROS Level and ID............02C039D0

Device Specific.(Z0)........2002606D

...

Device Specific.(Z9)........CS3.93A0 <--this is the same as ZB

Device Specific.(ZA)........C1D3.93A0

Device Specific.(ZB)........C2D3.93A0 <--to verify the firmware level ignore the first 3 characters in the ZB field (3.93A0)

Device Specific.(ZC)........00000000

Hardware Location Code......P2-I2
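
Reading the firmware level from the ZB field can be automated. A minimal sketch, assuming the lscfg output has the "Device Specific.(ZB)........value" shape shown above; the function name fc_firmware_level is hypothetical.

```shell
# Read `lscfg -vpl fcsX` output on stdin and print the adapter firmware
# level: the ZB field with its first three characters dropped.
fc_firmware_level() {
    sed -n 's/^[[:space:]]*Device Specific\.(ZB)\.*.\{3\}\([^[:space:]]*\).*$/\1/p'
}

# On AIX this would be: lscfg -vpl fcs0 | fc_firmware_level
fc_firmware_level <<'EOF'
        Device Specific.(ZA)........C1D3.93A0
        Device Specific.(ZB)........C2D3.93A0
        Device Specific.(ZC)........00000000
EOF
# prints: 3.93A0
```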

For Network adapter:

lscfg -vpl entX --> FRU line

ROM Level.(alterable).......GOL021 <--shows the firmware level

For Internal Disks:

lscfg -vpl hdiskX

Manufacturer................IBM

Machine Type and Model......HUS153014VLS300

Firmware (microcode) updates should be started from FLRT (Fix Level Recommendation Tool).

After selecting the system, you can choose the devices to update and read the description of how to do it.

Check with Google the "FRU Number" to get the feature code (FC); this is needed to get the correct files on FLRT.

Basic steps for firmware upgrade:

0. check that the /etc/microcode dir exists

1. download the needed rpm package from FLRT to a temp dir

2. make the rpm package available to the system:

cd to the temp dir

rpm -ihv --ignoreos --force SAS15K300-A428-AIX.rpm

3. check:

rpm -qa

cd /etc/microcode --> ls -l will show the new microcode

compare the file size with the one given in the document

compare the checksum with the one given in the document: sum <filename> (sum /usr/lib/microcode/df1000fd-0002.271304)

4. diag -d fcs0 -T download <--it will install (download) the microcode

Description of "M", "L", "C", "P" when choosing the microcode:

"M" is the most recent level of microcode found on the source. It is later than the level of microcode currently installed on the adapter.

"L" identifies levels of microcode that are later than the level of microcode currently installed on the adapter. Multiple later images are listed in descending order.

"C" identifies the level of microcode currently installed on the adapter.

"P" identifies levels of microcode that are previous to the level of microcode currently installed on the adapter. Multiple previous images are listed in descending order.

5. lsmcode -A <--for checking

To back-level the firmware:

diag -d fcsX -T "download -f -l previous"

Errpt - Diag - Alog - Syslogd

ERROR LOGGING:

The errdemon is started during system initialization and continuously monitors the special file /dev/error for new entries sent by either the kernel or applications. The label of each new entry is checked against the contents of the Error Record Template Repository, and if a match is found, additional information about the system environment or hardware status is added. The errdemon process sets up a memory buffer, and newly arrived entries are put into the buffer before they are written to the log, to minimize the possibility of a lost entry. The error log is a circular log that stores as many entries as fit within its defined size. By default it is /var/adm/ras/errlog, and it is in binary format.

The name and size of the error log file and the size of the memory buffer may be viewed with the errdemon command:

# /usr/lib/errdemon -l
Log File                /var/adm/ras/errlog
Log Size                1048576 bytes
Memory Buffer Size      32768 bytes

------------------------------

/usr/lib/errdemon                      restarts the errdemon program
/usr/lib/errstop                       stops the error logging daemon initiated by the errdemon program
/usr/lib/errdemon -l                   shows information about the error log file (path, size)
/usr/lib/errdemon -s 2000000           changes the maximum size of the error log file

errpt                                  retrieves the entries in the error log
errpt -a -j AA8AB241                   shows detailed info about the error (with -j, the error id can be specified)
errpt -s 1122164405 -e 1123100405      shows the error log for a time period (-s start date, -e end date, both in mmddhhmmyy format)
errpt -d H                             shows hardware errors (errpt -d S: software errors)
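
The -s and -e values of errpt use the mmddhhmmyy layout, which is easy to mistype by hand. Below is a minimal sketch of a helper that assembles such a stamp from separate date parts; the function name errpt_stamp and its argument order are made up for this example.

```shell
# Build an errpt -s/-e timestamp (mmddhhmmyy) from separate date parts.
# Arguments: year month day hour minute
errpt_stamp() {
    awk -v y="$1" -v m="$2" -v d="$3" -v H="$4" -v M="$5" \
        'BEGIN { printf "%02d%02d%02d%02d%02d\n", m, d, H, M, y % 100 }'
}

errpt_stamp 2005 11 22 16 44    # prints 1122164405

# Possible use on AIX:
#   errpt -s "$(errpt_stamp 2005 11 22 16 44)" -e "$(errpt_stamp 2005 11 23 10 4)"
```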

Error Classes:

H: Hardware

S: Software

O: Operator

U: Undetermined

Error Type:

P: Permanent - unable to recover from the error condition
   Pending - the device or component may become unavailable soon due to many errors
   Performance - the performance of the device or component has degraded to below an acceptable level

T: Temporary - recovered from the condition after several attempts

I: Informational

U: Unknown - the severity of the error cannot be determined

Types of Disk Errors:

DISK_ERR1: the disk should be replaced, it was used heavily

DISK_ERR2: caused by loss of electrical power

DISK_ERR3: caused by loss of electrical power

DISK_ERR4: indicates bad blocks on the disk (if there is more than one entry in a week, replace the disk)

errclear                     deletes entries from the error log (smitty errclear)
errclear 7                   deletes entries older than 7 days (0 clears all messages)
errclear -j CB4A951F 0       deletes all the messages with the specified ID

errlogger                    logs operator messages to the system error log
                             (errlogger "This is a test message")

------------------------------

Mail notification via errpt and errnotify

AIX has an Error Notification object class in the Object Data Manager (ODM). An errnotify object is a "hook" into the error logging facility that causes the execution of a program whenever an error message is recorded. By default, there are a number of predefined errnotify entries, and each time an error is logged via errlog, it checks if that error entry matches the criteria of any of the Error Notification objects.

0. make sure that sending mail works correctly from the server

1. create a text file (e.g. /tmp/errnotify.txt), which will be added to the ODM

Add the lines below if you want notifications on all kinds of errpt entries:

errnotify:

en_name = "mail_all_errlog"

en_persistenceflg = 1

en_method = "/usr/bin/errpt -a -l $1 | mail -s \"errpt $9 on `hostname`\" aix4adm@gmail.com" <--specify the email address here

Add the below lines if you want notifications on permanent hardware entries only:

errnotify:

en_name = "mail_perm_hw"

en_class = H

en_persistenceflg = 1

en_type = PERM

en_method = "/usr/bin/errpt -a -l $1 | mail -s \"Permanent hardware errpt $9 on `hostname`\" aix4adm@gmail.com"

2. root@bb_lpar: / # odmadd /tmp/errnotify.txt <--add the content of the text file to ODM

3. root@bb_lpar: / # odmget -q en_name=mail_all_errlog errnotify <--check if it is added successfully

4. root@bb_lpar: / # errlogger "This is a test message" <--check mail notification with a test errpt entry

You can delete the added errnotify object if it is not needed anymore:

root@bb_lpar: / # odmdelete -q 'en_name=mail_all_errlog' -o errnotify

0518-307 odmdelete: 1 objects deleted.

(source: http://www.kristijan.org/2012/06/error-report-mail-notifications-with-errnotify/)
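The stanza file from step 1 can also be generated. This is only a sketch: make_errnotify_stanza and the address admin@example.com are hypothetical, and the fields are the ones used in the stanzas above:

```shell
# make_errnotify_stanza NAME EMAIL [CLASS] [TYPE]
# prints an errnotify stanza; CLASS (e.g. H) and TYPE (e.g. PERM) are optional
# (hypothetical helper; field names are taken from the stanzas in these notes)
make_errnotify_stanza() {
    name=$1; email=$2; class=$3; type=$4
    printf 'errnotify:\n'
    printf '\ten_name = "%s"\n' "$name"
    if [ -n "$class" ]; then printf '\ten_class = %s\n' "$class"; fi
    printf '\ten_persistenceflg = 1\n'
    if [ -n "$type" ]; then printf '\ten_type = %s\n' "$type"; fi
    # $1, $9 and `hostname` must reach the ODM literally, hence the single quotes
    printf '\ten_method = "/usr/bin/errpt -a -l $1 | mail -s \\"errpt $9 on `hostname`\\" %s"\n' "$email"
}

make_errnotify_stanza mail_perm_hw admin@example.com H PERM
```

On AIX the output could be redirected to /tmp/errnotify.txt and loaded with odmadd as in step 2.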

--------------------------------------------------------------------------------------------

DIAGRPT: (DIAG logs reporter)

diagrpt Displays previous diagnostic results

cd /usr/lpp/diag*/bin

./diagrpt -r Displays the short version of the Diagnostic Event Log

./diagrpt -a Displays the long version of the Diagnostic Event Log

--------------------------------------------------------------------------------------------

ALOG:

/var/adm/ras this directory contains the master log files (alog command can read these files)

e.g. /var/adm/ras/conslog

alog -L shows what kind of logs there are (console, boot, bosinst...), these can be used by: alog -ot <type>

alog -Lt <type> shows the attributes of a type (console, boot ...): size, path to logfile...

alog -ot console shows the console log (messages that were written to the console)

alog -ot boot shows the bootlog

alog -ot lvmcfg lvm log file, shows what lvm commands were used (alog -ot lvmt: shows lvm commands and libs)

--------------------------------------------------------------------------------------------

syslogd:

/etc/syslog.conf should be edited to configure syslog:

the log file must exist; if it does not, create it with touch.

mail.debug /var/log/mail rotate size 100k files 4 # 4 files, 100kB each

user.debug /var/log/user rotate files 12 time 1m # 12 files, monthly rotate

kern.debug /var/log/kern rotate files 12 time 1m compress # 12 files, monthly rotate, compress

lssrc -ls syslogd shows syslogd specifics

(after modifying syslog.conf: refresh -s syslogd)
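Since the log files must exist, they can be created from the config file itself. A sketch, assuming the destination is the second field of each line and that only absolute paths are files; ensure_syslog_targets is a hypothetical helper name:

```shell
# ensure_syslog_targets [CONF]
# creates (touch) every log file named in CONF that does not exist yet
# (hypothetical helper; lines starting with # and non-file destinations are skipped)
ensure_syslog_targets() {
    conf=${1:-/etc/syslog.conf}
    awk '$1 !~ /^#/ && $2 ~ /^\// { print $2 }' "$conf" |
    while read -r logfile; do
        [ -e "$logfile" ] || touch "$logfile"
    done
}

# On AIX (as root):
#   ensure_syslog_targets /etc/syslog.conf && refresh -s syslogd
```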

ERROR LOGGING:

The errdemon is started during system initialization and continuously monitors the special file /dev/error for new entries sent by either the kernel or by applications. The label of each new entry is checked against the contents of the Error Record Template Repository, and if a match is found, additional information about the system environment or hardware status is added. A memory buffer is set by the errdemon process, and newly arrived entries are put into the buffer before they are written to the log to minimize the possibility of a lost entry. The errlog file is a circular log that stores as many entries as fit within its defined size. The default log file is /var/adm/ras/errlog, and it is in binary format.

The name and size of the error log file and the size of the memory buffer may be viewed with the errdemon command:

# /usr/lib/errdemon -l

Log File /var/adm/ras/errlog

Log Size 1048576 bytes

Memory Buffer Size 32768 bytes

------------------------------

/usr/lib/errdemon restarts the errdemon program

/usr/lib/errstop stops the error logging daemon initiated by the errdemon program

/usr/lib/errdemon -l shows information about the error log file (path, size)

Dump - Core

AIX generates a system dump when a severe error occurs. A system dump creates a picture of the system's memory contents. If the AIX kernel crashes, kernel data is written to the primary dump device. After a kernel crash AIX must be rebooted. During the next boot, the dump is copied into a dump directory (default is /var/adm/ras). The dump file name is vmcore.x (x indicates a number, e.g. vmcore.0).

When installing the operating system, the dump device is automatically configured. By default, the primary device is /dev/hd6, which is a paging logical volume, and the secondary device is /dev/sysdumpnull.

A rule of thumb is that a dump is about 1/4 of the size of real memory. The command "sysdumpdev -e" will also provide an estimate of the dump space needed for your machine. (The estimate can be higher at times of high load, as kernel space is larger at that time.)

When a system dump is occurring, the dump image is not written to disk in mirrored form. A dump to a mirrored lv results in an inconsistent dump and therefore, should be avoided. The logic behind this fact is that if the mirroring code itself were the cause of the system crash, then trusting the same code to handle the mirrored write would be pointless. Thus, mirroring a dump device is a waste of resources and is not recommended.

Since the default dump device is the primary paging lv, you should create a separate dump lv if you mirror your paging lv (which is suggested). If a valid secondary dump device exists and the primary dump device cannot be reached, the secondary dump device will accept the dump information intended for the primary dump device.

IBM recommendation:

All I can recommend you is to force a dump the next time the problem should occur. This will enable us to check which process was hanging or what caused the system to not respond any more. You can do this via the HMC using the following steps:

Operations -> Restart -> Dump

As a general recommendation you should always force a dump if a system is hanging. There are only very few cases in which we can determine the reason for a hanging system without having a dump available for analysis.

-------------------------------------------

Traditional vs Firmware-assisted dump:

Up to POWER5 only traditional dumps were available; the introduction of the POWER6 processor-based systems allowed system dumps to be firmware assisted. When performing a firmware-assisted dump, system memory is frozen and the partition rebooted, which allows a new instance of the operating system to complete the dump.

Traditional dump: it is generated before the partition is rebooted.

(When the system crashes, the memory contents are copied to the dump device at that moment.)

Firmware-assisted dump: it takes place when the partition is restarting.

(When the system crashes, memory is frozen, the hypervisor (firmware) allocates a new memory area in RAM, and the contents of memory are copied there. Then during reboot they are copied from this new memory area to the dump device.)

Firmware-assisted dump offers improved reliability over the traditional dump, by rebooting the partition and using a new kernel to dump data from the previous kernel crash.

When an administrator attempts to switch from a traditional to firmware-assisted system dump, system memory is checked against the firmware-assisted system dump memory requirements. If these memory requirements are not met, then the "sysdumpdev -t" command output reports the required minimum system memory to allow for firmware-assisted dump to be configured. Changing from traditional to firmware-assisted dump requires a reboot of the partition for the dump changes to take effect.

Firmware-assisted system dumps can be one of these types:

Selective memory dump: Selective memory dumps are triggered by, or use, AIX instances that must be dumped.

Full memory dump: The whole partition memory is dumped without any interaction with an AIX instance that is failing.

-------------------------------------------

Use the sysdumpdev command to query or change the primary or secondary dump devices.

- Primary: usually used when you wish to save the dump data

- Secondary: can be used to discard dump data (that is, /dev/sysdumpnull)

Flags for sysdumpdev command:

-l list the current dump destination

-e estimates the size of the dump (in bytes)

-p primary

-s secondary

-P make change permanent

-C turns on compression

-c turns off compression

-L shows info about last dump

-K turns on: always allow system dump

sysdumpdev -P -p /dev/dumpdev change the primary dumpdevice permanently to /dev/dumpdev

root@aix1: /root # sysdumpdev -l

primary /dev/dumplv

secondary /dev/sysdumpnull

copy directory /var/adm/ras

forced copy flag TRUE

always allow dump TRUE <--if it is FALSE, it can be changed in smitty sysdumpdev

dump compression ON <--if it is OFF, sysdumpdev -C changes it to ON (-c changes it to OFF)

Other commands:

sysdumpstart starts a dump (smitty dump)(it will do a reboot as well)

kdb analyzes the dump

/usr/lib/ras/dumpcheck checks if dump device and copy directory are able to receive the system dump

If dump device is a paging space, it verifies if enough free space exists in the copy dir to hold the dump

If dump device is a logical volume, it verifies it is large enough to hold a dump

(man dumpcheck)

-------------------------------------------

Creating a dump device

1. sysdumpdev -e <--shows an estimation, how much space is required for a dump

2. mklv -t sysdump -y lg_dumplv rootvg 3 hdisk0 <--it creates a sysdump lv with 3 PPs

3. sysdumpdev -Pp /dev/lg_dumplv <--making it as a primary device (system will use this lv now for dumps)
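The number of PPs for mklv follows from the estimate and the PP size of the volume group. A sketch of the arithmetic; pps_needed is a hypothetical helper, and the 4 MB PP size and the 10485760-byte estimate are made-up sample values:

```shell
# pps_needed BYTES PP_SIZE_MB -> number of physical partitions, rounded up
# (hypothetical helper; BYTES would come from sysdumpdev -e,
#  PP_SIZE_MB from the "PP SIZE" value of lsvg rootvg)
pps_needed() {
    bytes=$1
    pp_bytes=$(( $2 * 1024 * 1024 ))
    echo $(( (bytes + pp_bytes - 1) / pp_bytes ))
}

pps_needed 10485760 4    # 10 MB estimate, 4 MB PPs -> prints 3
```

It is probably wise to add some headroom on top of the result, since the estimate can grow under load.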

-------------------------------------------

System dump initiated by a user

!!!reboot will take place automatically!!!

1. sysdumpstart -p <--initiates a dump to the primary device

(Reboot will be done automatically)

(If a dedicated dump device is used, user initiated dumps are not copied automatically to copy directory.)

(If paging space is used for dump, then dump will be copied automatically to /var/adm/ras)

2. sysdumpdev -L <--shows dump took place on the primary device, time, size ... (errpt will show as well)

3. savecore -d /var/adm/ras <--copy last dump from system dump device to directory /var/adm/ras (if paging space is used this is not needed)

-------------------------------------------

How to move dumplv to another disk:

We want to move from hdisk1 to hdisk0:

1. lslv -l dumplv <--checking which disk

2. sysdumpdev -l <--checking sysdump device (primary was here /dev/dumplv)

3. sysdumpdev -Pp /dev/sysdumpnull <--changing primary to sysdumpnull (secondary, it is a null device) (lsvg -l rootvg shows closed state)

4. migratepv -l dumplv hdisk1 hdisk0 <--moving it from hdisk1 to hdisk0

5. sysdumpdev -Pp /dev/dumplv <--changing back to the primary device
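Steps 3-5 can be wrapped in a small function. This is a sketch only: run and move_dumplv are hypothetical names, and by default the commands are just printed (dry run) so the sequence can be reviewed first:

```shell
# run: prints the command when DRY_RUN is 1 (default), executes it otherwise
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# move_dumplv LV SOURCE_DISK TARGET_DISK (hypothetical helper)
move_dumplv() {
    lv=$1; src=$2; dst=$3
    run sysdumpdev -Pp /dev/sysdumpnull || return 1   # release the dump lv
    run migratepv -l "$lv" "$src" "$dst"              # move the lv to the other disk
    run sysdumpdev -Pp "/dev/$lv"                     # set it as primary again
}

move_dumplv dumplv hdisk1 hdisk0
```

With DRY_RUN=0 the same function would execute the commands on an AIX system.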

-------------------------------------------

The largest dump device is too small: (LABEL: DMPCHK_TOOSMALL, IDENTIFIER: E87EF1BE)

1. Dumpcheck runs from crontab

# crontab -l | grep dump

0 15 * * * /usr/lib/ras/dumpcheck >/dev/null 2>&1

2. Check if there are any errors:

# errpt

IDENTIFIER TIMESTAMP T C RESOURCE_NAME DESCRIPTION

E87EF1BE 0703150008 P O dumpcheck The largest dump device is too small.

E87EF1BE 0702150008 P O dumpcheck The largest dump device is too small.

3. If you find a new error message, find the dump lv:

# sysdumpdev -l

primary /dev/dumplv

secondary /dev/sysdumpnull

copy directory /var/adm/ras

forced copy flag TRUE

always allow dump TRUE

dump compression ON

List dumplv from rootvg:

# lsvg -l rootvg|grep dumplv

dumplv dump 8 8 1 open/syncd N/A

4. Extend with 1 PP

# extendlv dumplv 1

dumplv dump 9 9 1 open/syncd N/A

Run problem check at the end

OK -> done

Not OK -> Extend with 1 PP again.

-------------------------------------------

changing the autorestart attribute of the systemdump:

(smitty chgsys as well)

1. lsattr -El sys0 -a autorestart

autorestart true Automatically REBOOT system after a crash True

2. chdev -l sys0 -a autorestart=false

sys0 changed

----------------------------------------------

CORE FILE:

errpt shows which program created the core file; if not:

- use the "strings" command (for example: "strings core | grep _=")

- or the lquerypv command (for example: "lquerypv -h core 6b0 64")

man syscorepath

syscorepath -p /tmp

syscorepath -g

Devices

CEC - Central Electronic Complex

It generally refers to a discrete portion of the system containing CPU, RAM, etc. The "IO Drawer" would be a separate unit.

A backplane is similar to a motherboard, except that a motherboard has a CPU as well, while a backplane does not (on IBM servers we have processor cards). Sysplanar is the same as backplane or "motherboard". sys0 is the AIX kernel device of the system planar.

In order to attach devices (like printer...) to an AIX system, we must tell AIX the characteristics of these devices so the OS can send correct signals to the device.

Physical Devices Actual hardware that is connected in some way to the system.

Ports The physical connectors/adapters in the system where physical devices are attached.

Device Drivers Software in the kernel that controls the activity on a port. (format of the data that is sent to the device)

/dev A directory of the logical devices that can be directly accessed by the user.

(Some of the logical devices are only referenced in the ODM and cannot be accessed by users).

Logical Devices Software interfaces (special files) that present a means of accessing a physical device. (to users and programs)

Data transferred to logical devices will be sent to the appropriate device driver.

Data read from logical devices will be read from the appropriate device driver.

Devices can be one of two types:

- Block device is a structured random access device. Buffering is used to provide a block-at-a-time method of access.

- Character (raw) device is a sequential, stream-oriented device which provides no buffering.

Most block devices also have an equivalent character device. For example, /dev/hd1 provides buffered access to a logical volume whereas /dev/rhd1 provides raw access to the same logical volume. (The raw devices are usually accessed by the kernel.)

----------------------------------

Major and Minor device numbers:

maj.min dev nums

brw------- 1 root system 32,8192 Nov 03 14:19 hdisk3

brw------- 1 root system 32,8194 Nov 03 14:19 hdisk4

brw------- 1 root system 32,8195 Nov 07 07:08 hdisk5

It means: here we have a major device which is 32 and there are minor devices for this major device, which are: 8192,...

More precisely, the major number refers to the software section of code in the kernel which handles that type of device, and the minor number to the particular device of that type.
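The major and minor numbers can be picked out of an "ls -l" listing of /dev. A sketch, assuming the numbers appear as "major,minor" (with or without spaces after the comma) and the device name is the last field; majmin is a hypothetical helper name:

```shell
# majmin: reads "ls -l" lines of device files on stdin,
# prints "<device> major=<n> minor=<n>" for each line with a major,minor pair
# (hypothetical helper for illustration)
majmin() {
    awk 'match($0, /[0-9]+, *[0-9]+/) {
        pair = substr($0, RSTART, RLENGTH)
        split(pair, mm, /, */)
        printf "%s major=%s minor=%s\n", $NF, mm[1], mm[2]
    }'
}

# On AIX: ls -l /dev/hdisk* | majmin
echo 'brw------- 1 root system 32,8192 Nov 03 14:19 hdisk3' | majmin
# prints: hdisk3 major=32 minor=8192
```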

----------------------------------

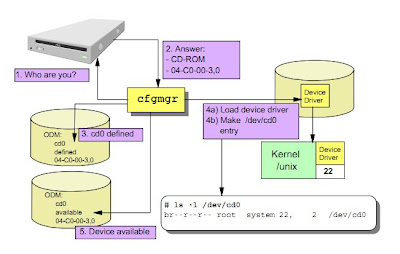

cfgmgr - mkdev/rmdev:

The Configuration Manager (cfgmgr) is a program that automatically configures devices on your system during system boot and run time. The Configuration Manager uses the information from the predefined and customized databases during this process, and updates the customized database afterwards.

mkdev either creates an entry in the customized database when configuring a device or moves a device from defined to available. When defined, there is an entry in the customized database already. To move to the available state means the device driver is loaded into the kernel.

rmdev changes device states going in the opposite direction. rmdev without the -d option is used to take a device from the available to the defined state (leaving the entry in the customized database but unloading the device driver). When using the -d option, rmdev removes the device from the customized database.
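The state changes described above can be summarized as a small model. This is a simplified illustration, not how the commands are implemented; next_state and the state name "undefined" (no entry in the customized database) are made up for the example:

```shell
# next_state STATE COMMAND... -> prints the state after the command
# states: undefined (not in customized db), defined, available
# (hypothetical model; only mkdev, rmdev and rmdev -d are covered)
next_state() {
    state=$1
    shift
    case "$state:$*" in
        undefined:mkdev|defined:mkdev)           echo available ;;
        available:rmdev)                         echo defined ;;
        defined:"rmdev -d"|available:"rmdev -d") echo undefined ;;
        *)                                       echo "$state" ;;
    esac
}

next_state available rmdev       # prints defined
next_state defined mkdev         # prints available
next_state available rmdev -d    # prints undefined
```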

----------------------------------

LOCATION CODES:

The location code is another way of identifying the physical device. The format is: AB-CD-EF-GH.

Devices with a location code are physical devices. Devices without a location code are logical devices.

physical location code: lscfg | grep hdisk0

AIX location code: lsdev -Cc disk

Both location codes: lsdev -Cc adapter -F "name status physloc location description"

----------------------------------

FRU, VPD:

FRU (Field Replaceable Unit) is the number used by IBM to identify the devices (sometimes it is called 'Part Number'). These are needed for hardware replacement.

VPD (Vital Product Data) is the basic data about the device, which is stored in the EEPROM of the device and can be read by the OS. It can be displayed with the command 'lscfg' and it contains the FRU as well.

lsdev shows information about devices from ODM (-C Customized Devices, -P Predefined Devices)

lscfg display vital product data (VPD) such as part numbers, serial numbers...

lsattr display attributes and possible values (the information is obtained from the Configuration database (ODM?), not the device)

----------------------------------

INFO ABOUT THE DEVICES:

prtconf displays system configuration information

lsdev -PH lists supported (predefined) devices (-H shows the header above the column; lsdev -Pc adapter -H)

lsdev -CH lists currently defined and configured devices (lsdev -Cc adapter -H)

lsdev -CHF "name status physloc location description" this will show physical location code and AIX location as well

(Devices with a location code are physical devices. Devices without a location code are logical devices.)

lsdev -p fscsi0 displays the child devices of the given parent device (in this case the disks of the adapter)

lsdev -l hdisk0 -F parent displays the parent device of the given child device (in this case the adapter of the disk)

(it is the same: lsparent -Cl hdisk0)

mkdev -l hdisk1 put from defined into available state (or creates an entry in customized db if there wasn't any)

rmdev -l hdisk1 put from available into defined state

rmdev -dl hdisk1 permanently remove an available or defined device

rendev -l hdisk5 -n hdisk16 it changes the name of the device (as long as it is not in a vg (in AIX 6.1 TL6))

odmget -q parent=pci0 CuDv shows child devices of pci0 (in the output look for the "name" lines)

odmget -q name=pci0 CuDv shows parent of pci0 (in the output look for the "parent" line)

lscfg -v displays characteristics for all devices

lscfg -vl ent1 displays characteristics for the specified device (-l: logical device name)

lscfg -l ent* displays all ent devices (it can be fcs*, fscsi*, scsi*...)

lsattr -El ent1 displays attributes for devices (-l: logical device name, -E:effective attr.)

lsattr -Rl fcs0 -a init_link displays what values can be given to an attribute

odmget PdAt |grep -p reserve_policy displays what values can be given to an attribute

lsslot -c pci lists hot plug pci slots (physical)

lsslot -c slot lists logical slots

lsslot -c phb lists logical slots (PCI Host Bridge)

cfgmgr configures devices and installs device software (no need to use mkdev, rmdev)

cfgmgr -v detailed output of cfgmgr

cfgmgr -l fcsX configure detected devices attached to fcsX (it configures child devices as well)

cfgmgr -i /tmp/drivers to install drivers which is in /tmp/drivers automatically during configuration

smitty devices

---------------------------

Changing state of a device:

1.lsdev -Cc tape in normal case it shows: rmt0 Available ...

2.rmdev -l rmt0 it will show: rmt0 Defined

3.rmdev -dl rmt0 it will show: rmt0 deleted (the device configuration is unloaded from the ODM)

Now rmt0 is completely removed. To redetect the device:

4.cfgmgr after cfgmgr, the lsdev -Cc tape command shows: rmt0 Available ...

---------------------------

If rmdev does not work because: "... specified device is busy":

1. lsdev -C| grep fcs1 <--check the location (in this case it is 07-00)

2. lsdev -C| grep 07-00 <--check all the devices in this location

3. this will show which process is locking the device

for i in `lsdev -C| grep 07-00 | awk '{print $1}'`; do

fuser /dev/$i

done

CEC - Central Electronic Complex

It generally refers to a discrete portion of the system containing the CPU, RAM, etc. The "I/O drawer" is a separate unit.

A backplane is similar to a motherboard, except that a motherboard carries the CPU as well, while a backplane does not (on IBM servers the CPUs sit on processor cards). "Sysplanar" (system planar) is another name for the backplane or "motherboard". sys0 is the AIX kernel device for the system planar.

To attach a device (such as a printer) to an AIX system, we must tell AIX the characteristics of the device so that the OS can send the correct signals to it.

Physical Devices Actual hardware that is connected in some way to the system.

Ports The physical connectors/adapters in the system where physical devices are attached.

Device Drivers Software in the kernel that controls the activity on a port and the format of the data that is sent to the device.

/dev A directory of the logical devices that can be directly accessed by the user.

(Some of the logical devices are only referenced in the ODM and cannot be accessed by users).

Logical Devices Software interfaces (special files) that give users and programs a means of accessing a physical device.

Data written to a logical device is passed to the appropriate device driver.

Data read from a logical device is obtained from the appropriate device driver.

Devices can be one of two types:

- Block device is a structured random access device. Buffering is used to provide a block-at-a-time method of access.

- Character (raw) device is a sequential, stream-oriented device which provides no buffering.

Most block devices also have an equivalent character device. For example, /dev/hd1 provides buffered access to a logical volume, whereas /dev/rhd1 provides raw access to the same logical volume. (The raw devices are usually accessed by the kernel.)

----------------------------------

Major and Minor device numbers:

maj.min dev nums

brw------- 1 root system 32,8192 Nov 03 14:19 hdisk3

brw------- 1 root system 32,8194 Nov 03 14:19 hdisk4

brw------- 1 root system 32,8195 Nov 07 07:08 hdisk5

In this listing the major number is 32 for all three disks, and the minor numbers under that major number are 8192, 8194 and 8195.

More precisely, the major number refers to the software section of code in the kernel which handles that type of device, and the minor number to the particular device of that type.
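As a small illustration of reading these numbers, the sketch below pulls the major and minor numbers out of an `ls -l` style listing. The function name dev_numbers is made up for this example, and it assumes the numbers appear in the fifth column either as "32,8192" or as "32, 8192"; the sample lines are the ones shown above.

```shell
# Print "name major minor" for each block (b) or character (c) device
# line of an `ls -l /dev` style listing read from standard input.
# dev_numbers is a hypothetical helper, not an AIX command.
dev_numbers() {
    awk '$1 ~ /^[bc]/ {
        maj = $5; min = $6
        # "32,8192" in one field: split it; "32," "8192": already separate
        if (index($5, ",") < length($5)) { split($5, n, ","); maj = n[1]; min = n[2] }
        sub(/,$/, "", maj)
        print $NF, maj, min
    }'
}

dev_numbers <<'EOF'
brw------- 1 root system 32,8192 Nov 03 14:19 hdisk3
brw------- 1 root system 32,8194 Nov 03 14:19 hdisk4
brw------- 1 root system 32,8195 Nov 07 07:08 hdisk5
EOF
```

On a live system the same function could be fed with `ls -l /dev | dev_numbers`.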

----------------------------------

cfgmgr - mkdev/rmdev:

The Configuration Manager (cfgmgr) is a program that automatically configures devices on your system during system boot and run time. The Configuration Manager uses the information from the predefined and customized databases during this process, and updates the customized database afterwards.

mkdev either creates an entry in the customized database when configuring a device or moves a device from defined to available. When defined, there is an entry in the customized database already. To move to the available state means the device driver is loaded into the kernel.

rmdev changes device states going in the opposite direction. rmdev without the -d option is used to take a device from the available to the defined state (leaving the entry in the customized database but unloading the device driver). When using the -d option, rmdev removes the device from the customized database.
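The state changes described above can be summarized as a toy model. This is only a sketch for reasoning about the states: it does not touch the ODM, the function name next_state and the state name "undefined" (no entry in the customized database) are invented for the example, and it assumes cfgmgr finds the device physically present.

```shell
# Toy model of the device states; nothing here talks to the ODM.
# States: undefined (no customized entry), Defined, Available.
# Usage: next_state <current state> <action>
next_state() {
    state=$1
    action=$2
    case "$action:$state" in
        "mkdev:Defined"|"mkdev:undefined")       echo Available ;;  # entry created if needed, driver loaded
        "rmdev:Available")                       echo Defined ;;    # driver unloaded, entry kept
        "rmdev -d:Available"|"rmdev -d:Defined") echo undefined ;;  # entry removed from customized db
        "cfgmgr:"*)                              echo Available ;;  # assumes the device is detected
        *)                                       echo "$state" ;;   # no change in this model
    esac
}

# Walk one device through rmdev, rmdev -d and cfgmgr.
s=Available
for a in "rmdev" "rmdev -d" "cfgmgr"; do
    s=$(next_state "$s" "$a")
    echo "$a -> $s"
done
```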

----------------------------------

LOCATION CODES:

The location code is another way of identifying the physical device. The format is: AB-CD-EF-GH.

Devices with a location code are physical devices. Devices without a location code are logical devices.

physical location code: lscfg | grep hdisk0

AIX location code: lsdev -Cc disk

Both location codes: lsdev -Cc adapter -F "name status physloc location description"
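To make the AB-CD-EF-GH format concrete, here is a minimal sketch that splits an AIX location code into its four fields. The function name split_location is hypothetical and the sample code 07-00-01-00 is made up; shorter codes such as an adapter's 07-00 simply leave the trailing fields empty.

```shell
# Split an AIX location code (AB-CD-EF-GH) into its fields.
# split_location is a hypothetical helper for illustration only.
split_location() {
    echo "$1" | awk -F- '{ printf "AB=%s CD=%s EF=%s GH=%s\n", $1, $2, $3, $4 }'
}

split_location 07-00-01-00   # made-up full-length code
split_location 07-00         # adapter-style code with only two fields
```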

----------------------------------

FRU, VPD:

The FRU (Field Replaceable Unit) number is the number used by IBM to identify a device (it is sometimes called the 'Part Number'). It is needed for hardware replacement.

VPD (Vital Product Data) is the basic information about a device. It is stored in the EEPROM of the device and can be read by the OS. The 'lscfg' command displays it, and it contains the FRU number as well.

lsdev shows information about devices from the ODM (-C Customized Devices, -P Predefined Devices)

lscfg displays vital product data (VPD) such as part numbers, serial numbers...

lsattr displays attributes and possible values (the information is obtained from the configuration database (ODM), not from the device)

----------------------------------

INFO ABOUT THE DEVICES:

prtconf displays system configuration information

lsdev -PH lists supported (predefined) devices (-H shows the header above the column; lsdev -Pc adapter -H)

lsdev -CH lists currently defined and configured devices (lsdev -Cc adapter -H)

lsdev -CHF "name status physloc location description" this will show physical location code and AIX location as well

(Devices with a location code are physical devices. Devices without a location code are logical devices.)

lsdev -p fscsi0 displays the child devices of the given parent device (in this case the disks of the adapter)

lsdev -l hdisk0 -F parent displays the parent device of the given child device (in this case the adapter of the disk)

(a related command: lsparent -Cl hdisk0 lists the possible parent devices)

mkdev -l hdisk1 moves the device from the Defined to the Available state (or creates an entry in the customized db if there wasn't one)

rmdev -l hdisk1 moves the device from the Available to the Defined state

rmdev -dl hdisk1 permanently remove an available or defined device

rendev -l hdisk5 -n hdisk16 changes the name of the device, as long as it is not in a VG (rendev is available from AIX 6.1 TL6)

odmget -q parent=pci0 CuDv shows child devices of pci0 (in the output look for the "name" lines)

odmget -q name=pci0 CuDv shows parent of pci0 (in the output look for the "parent" line)

lscfg -v displays characteristics for all devices

lscfg -vl ent1 displays characteristics for the specified device (-l: logical device name)

lscfg -l ent* displays all ent devices (it can be fcs*, fscsi*, scsi*...)

lsattr -El ent1 displays attributes for devices (-l: logical device name, -E:effective attr.)

lsattr -Rl fcs0 -a init_link displays what values can be given to an attribute

odmget PdAt |grep -p reserve_policy displays what values can be given to an attribute

lsslot -c pci lists hot plug pci slots (physical)

lsslot -c slot lists logical slots

lsslot -c phb lists logical slots (PCI Host Bridge)

cfgmgr configures devices and installs device software (no need to use mkdev, rmdev)

cfgmgr -v detailed output of cfgmgr

cfgmgr -l fcsX configure detected devices attached to fcsX (it configures child devices as well)

cfgmgr -i /tmp/drivers installs the drivers found in /tmp/drivers automatically during configuration

smitty devices

---------------------------

Changing state of a device:

1. lsdev -Cc tape normally it shows: rmt0 Available ...

2. rmdev -l rmt0 it will show: rmt0 Defined

3. rmdev -dl rmt0 it will show: rmt0 deleted (the device definition is removed from the ODM)

Now rmt0 is completely removed. To redetect the device:

4. cfgmgr after cfgmgr, the lsdev -Cc tape command shows: rmt0 Available ...

---------------------------

If rmdev does not work because: "... specified device is busy":

1. lsdev -C| grep fcs1 <--check the location (in this case it is 07-00)

2. lsdev -C| grep 07-00 <--check all the devices in this location

3. This shows which processes are holding the devices at that location:

# loop over every device name at location 07-00 and list the PIDs using it
for i in $(lsdev -C | grep 07-00 | awk '{print $1}'); do
    fuser "/dev/$i"
done

Time Synchronization, Timed NTPD, Set Clock

date - time

Time synchronization: timed, ntpd, setclock

ntp is considered superior to timed.

NTP:

ntpd is a daemon that runs to keep your date/time up to date. For this you must configure ntp.conf so that it knows where to get the date/time.

ntpdate is a command that does an update right now against the server given on its command line, as opposed to waiting for ntpd to do it.

In short: if you want ntpd, you need to configure ntp.conf. You can still use ntpdate to do manual updates.

Put these lines into /etc/ntp.conf:

server timeserver.domain.com

driftfile /etc/ntp.drift

tracefile /etc/ntp.trace

...

...

Date change:

1. stopsrc -s xntpd

2. ntpdate <ntp server> <--ntpdate refuses to run if xntpd is running

4 Oct 14:20:07 ntpdate[491628]: step time server 200.200.10.100 offset -32.293912 sec

<<3. smit date>>

4. vi /etc/rc.tcpip

5. uncomment this line: start /usr/sbin/xntpd "$src_running"

6. startsrc -s xntpd
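The ntpdate result line in step 2 reports the clock offset in seconds. The sketch below extracts that value and compares it with the 1000-second limit that this page gives for xntpd synchronization. The function names ntp_offset and offset_ok are invented for the example, and the parsing assumes the word "offset" is followed directly by the number, as in the sample line above.

```shell
# Extract the offset (in seconds) from an ntpdate result line on stdin.
# ntp_offset and offset_ok are hypothetical helpers, not AIX commands.
ntp_offset() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "offset") { print $(i + 1); exit } }'
}

# Succeed if the absolute offset ($1) is below the limit ($2, default 1000 s).
offset_ok() {
    awk -v off="$1" -v lim="${2:-1000}" 'BEGIN {
        off = off + 0; lim = lim + 0      # force numeric comparison
        if (off < 0) off = -off
        exit !(off < lim)
    }'
}

line='4 Oct 14:20:07 ntpdate[491628]: step time server 200.200.10.100 offset -32.293912 sec'
off=$(echo "$line" | ntp_offset)
if offset_ok "$off"; then
    echo "offset $off s: within the limit, xntpd can synchronize"
else
    echo "offset $off s: too large, set the clock manually first"
fi
```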

---------------------

Configuring NTP:

ON SERVER:

1. lssrc -ls xntpd <--Sys peer should show a valid server or 127.127.1.0.

If the server is "insane":

2. vi /etc/ntp.conf:

Add:

server 127.127.1.0

Double check that "broadcastclient" is commented out.

stopsrc -s xntpd

startsrc -s xntpd

If it is a DB server, use the -x flag to prevent the clock from changing in a negative direction.

Enter the following: startsrc -s xntpd -a "-x"

3. lssrc -ls xntpd <--check if the server is synched. It can take up to 12 minutes

ON CLIENT:

1. ntpdate -d ip.address.of.server <--verify there is a suitable server for synch

The offset must be less than 1000 seconds for xntpd to synch.

If the offset is greater than 1000 seconds, change the time manually on the client and run ntpdate -d again.

If you get the message, "no server suitable for synchronization found", verify xntpd is running on the server (see above) and that no firewalls are blocking port 123.

2. vi /etc/ntp.conf <--specify your xntp server in /etc/ntp.conf:

(Comment out the "broadcastclient" line and add the line: server ip.address.of.server prefer)

Leave the driftfile and tracefile at their defaults.

3. startsrc -s xntpd <--start the xntpd daemon

(Use the -x flag if it is appropriate for your environment.)
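The ntp.conf change in client step 2 can also be scripted. The following is a minimal sketch, not a tested procedure: the function name ntp_client_conf is made up, 192.0.2.10 is a placeholder address, and the sample file contents are hypothetical. It writes the edited configuration to standard output so the result can be reviewed before it replaces /etc/ntp.conf.

```shell
# Print an edited copy of an ntp.conf: comment out any active
# "broadcastclient" line and append a preferred server entry.
# $1 = path to the existing file, $2 = server address
# ntp_client_conf is a hypothetical helper for illustration only.
ntp_client_conf() {
    sed 's/^[[:space:]]*broadcastclient/#&/' "$1"
    echo "server $2 prefer"
}

# Demo on a throwaway sample file (contents are an assumption).
tmp=$(mktemp)
printf 'broadcastclient\ndriftfile /etc/ntp.drift\ntracefile /etc/ntp.trace\n' > "$tmp"
ntp_client_conf "$tmp" 192.0.2.10
rm -f "$tmp"
```

Lines that are already commented out are left untouched, because the pattern only matches "broadcastclient" at the start of a line.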